Applying optical flow to Sentinel-2 imagery

There are a lot of satellites gathering a lot of imagery of the Earth, day in, day out. The government-subsidized, freely available data from the Landsat-8 and Sentinel-2 missions have a maximum spatial resolution of 15 m (Landsat-8 panchromatic band) and 10 m (Sentinel-2 R/G/B/NIR bands), respectively. Free imagery at such a high resolution is pretty cool!

Sentinel-2 has a revisit time of ~5 days, meaning it will view the same plot of land at about the same angle every 5 days. However, the pointing accuracy is never perfect, so the pixels are never exactly in the same place. The question in my mind is: can we use multiple images over time to effectively do "superresolution"?

Getting data

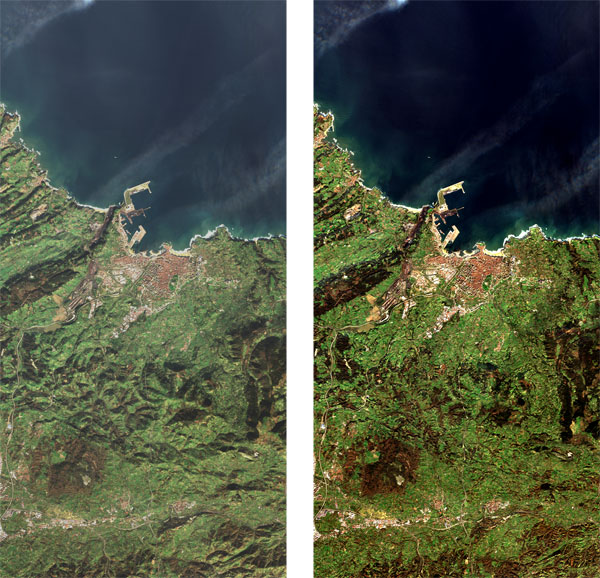

I'm using Level-2A data, as this gives me Bottom-of-Atmosphere (BOA) reflectance, while the Level-1C product gives Top-of-Atmosphere (TOA) reflectance. The TOA reflectance is basically what the satellite "sees", which can look much more "hazy" because the light passes through the atmosphere twice, on the way down and back up. The BOA reflectance is an estimate of what the surface itself reflects, which makes it look less "hazy" and will hopefully reduce the differences between images a bit.

Image from ESA

I obtained this imagery through the web interface of the Copernicus Open Access Hub, searching over Amsterdam and manually filtering for images with a low cloud percentage. I chose only full tiles where the satellite has approximately the same viewing and solar angle (the satellite is in a sun-synchronous orbit, but the hub might also show images taken one swath to the right or left of your selected location). An API is also provided and might be more convenient for downloading large amounts of data.

I unpacked this data into the folder sentinel/. The data packages are a bit obtuse, but the format is well described (naming conventions, SAFE format), and they even include free banners!
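Because the product names follow a documented convention, the metadata we care about (mission, processing level, sensing time, relative orbit, tile) can be read straight from the folder name. A minimal sketch, assuming the compact naming convention `MMM_MSIXXX_YYYYMMDDTHHMMSS_Nxxyy_ROOO_Txxxxx_<discriminator>.SAFE` used by these products; `parse_safe_name` and its field names are hypothetical, not part of any Sentinel-2 library.

```python
import re
from datetime import datetime

# Hypothetical pattern for the compact Sentinel-2 product naming convention:
# MMM_MSIXXX_YYYYMMDDTHHMMSS_Nxxyy_ROOO_Txxxxx_<discriminator>.SAFE
SAFE_PATTERN = re.compile(
    r"^(?P<mission>S2[A-Z])_MSI(?P<level>L1C|L2A)_(?P<sensing>\d{8}T\d{6})"
    r"_N(?P<baseline>\d{4})_R(?P<orbit>\d{3})_T(?P<tile>[0-9]{2}[A-Z]{3})"
    r"_(?P<discriminator>\d{8}T\d{6})\.SAFE$"
)


def parse_safe_name(name):
    """Split a Sentinel-2 product folder name into its fields."""
    m = SAFE_PATTERN.match(name)
    if m is None:
        raise ValueError(f"not a Sentinel-2 SAFE product name: {name}")
    fields = m.groupdict()
    # Datatake sensing start time, e.g. 20190401T105029
    fields["sensing"] = datetime.strptime(fields["sensing"], "%Y%m%dT%H%M%S")
    # Relative orbit number, e.g. R051 -> 51
    fields["orbit"] = int(fields["orbit"])
    return fields


info = parse_safe_name("S2B_MSIL2A_20190401T105029_N0211_R051_T31UFU_20190401T140125.SAFE")
print(info["mission"], info["level"], info["sensing"], info["orbit"], info["tile"])
```

The sensing time parsed here is the same timestamp that appears in the band file names (T31UFU_20190401T105029_B02_10m.jp2), which is what the loading loop further down relies on.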

Free banner included with the free data

```python
import os, re
from glob import glob
from datetime import datetime
from collections import defaultdict

from IPython.display import Image, display
import matplotlib.pyplot as plt
import numpy as np
import xarray as xr
import scipy.ndimage

FIGSIZE = (14, 7)

# Note: xr.open_rasterio was deprecated in later xarray releases and has since been
# removed; rioxarray.open_rasterio is the suggested replacement.
da = xr.open_rasterio("sentinel/S2B_MSIL2A_20190401T105029_N0211_R051_T31UFU_20190401T140125.SAFE/GRANULE/L2A_T31UFU_A010802_20190401T105221/IMG_DATA/R10m/T31UFU_20190401T105029_B02_10m.jp2")
da
```

Upon inspection of this one JPEG2000 file for the blue band (B02) at one timestep, we see that the projection is EPSG:32631, which is UTM zone 31N. Xarray/rasterio extracted this from the embedded metadata. The image is quite large, with sides of over 10k pixels, so I used this tool to get a tight bounding box directly in UTM coordinates, which we will use to crop the images and make them a bit easier to work with. (Doing optical flow on 10k x 10k pixels is not much fun.)
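A quick back-of-the-envelope check on how much the crop saves, using the bounding box from the next cell. This assumes the 10 m pixel size of bands B02/B03/B04 and the standard 10980 x 10980 pixel size of a Sentinel-2 tile at 10 m resolution; the variable names are just for illustration.

```python
# Bounding box used for cropping, in UTM zone 31N metres
x_min, x_max = 621954, 640077
y_min, y_max = 5794953, 5811457

PIXEL_SIZE = 10      # metres per pixel for the 10 m bands
TILE_PIXELS = 10980  # a Sentinel-2 tile is 109.8 km wide, i.e. 10980 px at 10 m

width_px = (x_max - x_min) / PIXEL_SIZE
height_px = (y_max - y_min) / PIXEL_SIZE
fraction = (width_px * height_px) / TILE_PIXELS**2

print(f"crop: {width_px:.0f} x {height_px:.0f} px, {fraction:.1%} of a full tile")
```

So the crop keeps roughly 1812 x 1650 pixels, about 2.5% of the tile. Note that in the cell below the `y` slice runs from the larger to the smaller northing: raster rows go from north to south, so the y coordinate decreases along that axis and the slice has to follow the same order.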

```python
# Bounding box in UTM 31N coordinates (y runs from north to south, like the raster rows)
extent = dict(x=slice(621954, 640077), y=slice(5811457, 5794953))

data_arrays = defaultdict(list)
for p in sorted(glob("sentinel/S2B_MSIL2A_*/GRANULE/*/IMG_DATA/R10m/*_B0*_10m.jp2")):
    # How I learned to stop worrying and love the walrus syntax in Python 3.8
    # Keep only B02, B03 and B04 (the glob also matches the 10 m NIR band B08)
    if m := re.match(r"^.*_(.+)_B(0[234])_.*$", p):
        dt = datetime.strptime(m.group(1), "%Y%m%dT%H%M%S")
        band = int(m.group(2))
        # Returns a DataArray, cropped to the bounding box
        da = xr.open_rasterio(p).sel(**extent)
        da.coords["band"] = [band]
        da.coords["dt"] = dt
        data_arrays[band].append(da)

# Use a nested concat to put these data arrays back together in one big data array
d = xr.concat([xr.concat(data_arrays[k], dim="dt") for k in sorted(data_arrays.keys())], dim="band")
d
```

One can make many different band combinations for different purposes; we are mostly interested in "natural colors" to make good-looking pictures.

Visualising this imagery properly in natural colors is not entirely straightforward: we'd need to account for human perception, sensor properties and more. I found one exceptionally good paper (code) that explains everything about improving this mapping. The default Sentinel-2 True Color Image (TCI) provided in the data granule uses the basic method of mapping bands 4, 3 and 2 to R, G and B, and applies some gain and gamma correction in the sRGB space.
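For the curious, a gain-plus-gamma mapping of this kind can be sketched as follows. This is not ESA's TCI algorithm: it assumes L2A digital numbers are reflectance x 10000 with no offset (true for products from processing baselines before 04.00, like the N0211 data here), picks an arbitrary `gain` of 2.5, and applies the standard sRGB transfer function. `srgb_encode` and `true_color` are hypothetical helper names.

```python
import numpy as np


def srgb_encode(linear):
    """Standard sRGB transfer function: linear values in [0, 1] -> encoded values in [0, 1]."""
    linear = np.clip(linear, 0.0, 1.0)
    return np.where(
        linear <= 0.0031308,
        12.92 * linear,                               # linear segment near black
        1.055 * np.power(linear, 1 / 2.4) - 0.055,    # power-law segment
    )


def true_color(b04, b03, b02, gain=2.5, quantification=10000.0):
    """Map L2A bands 4, 3, 2 to an sRGB image of shape (..., 3).

    Assumes digital numbers are reflectance * `quantification` (no offset);
    `gain` is an arbitrary brightening factor, since surface reflectance is mostly low.
    """
    rgb = np.stack([b04, b03, b02], axis=-1).astype(np.float64) / quantification
    return srgb_encode(gain * rgb)
```

With the arrays from this post, something like `true_color(*d.isel(dt=0).sortby("band", ascending=False).values)` would give an image that `plt.imshow` can display directly.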

I'll just go with the simple method for now, as our constraints here are not so stringent. It just has to look cool.

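```python
fig = plt.figure(figsize=FIGSIZE)
# Sort bands as 4, 3, 2 so that they map to R, G, B
v = d.isel(dt=0).transpose("y", "x", "band").sortby("band", ascending=False).values
plt.axis("off")
# Simple contrast stretch: divide by the 99th percentile and clip to [0, 1]
plt.imshow((v / np.quantile(v.flatten(), .99)).clip(0, 1));
```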

All right! We now have multiple high-resolution images of Amsterdam. We'll have to zoom in a bit to see individual pixels.

Estimating subpixel shift using optical flow

To find the assumed inaccuracies in sensor pointing between images, we can use optical flow techniques. I had used these algorithms before but never really dove into the math behind them, which turns out to be a lot of fun. So let's go into that for a second.

The basic idea of optical flow is to assume that the intensity of a pixel stays constant while it moves a little bit from one frame to the next. A first-order approximation of that assumption results in the equation below, where $I_x$ and $I_y$ are the derivatives of intensity along both axes, $I_t$ is its derivative over time, and $V_x$ and $V_y$ are the velocity or... optical flow along both axes:

$$I_x V_x + I_y V_y = - I_t$$

This basically says, in my mind, that the intensity change from moving along the spatial gradient at velocity $V$ and the intensity change over time must add up to zero, since the pixel keeps its intensity. Which makes sense, because we'd expect the intensity of a pixel that has very different neighboring pixels (steep gradient) to change a lot when the scene moves.
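For reference, this is how the equation follows from the constant-intensity assumption. A pixel at $(x, y)$ at time $t$ moves by $(V_x \delta t, V_y \delta t)$ during a short time $\delta t$ and keeps its intensity:

$$I(x + V_x \delta t,\ y + V_y \delta t,\ t + \delta t) = I(x, y, t)$$

Expanding the left-hand side to first order:

$$I(x + V_x \delta t,\ y + V_y \delta t,\ t + \delta t) \approx I(x, y, t) + I_x V_x \delta t + I_y V_y \delta t + I_t \delta t$$

Combining the two and dividing by $\delta t$:

$$I_x V_x + I_y V_y + I_t = 0 \quad\Leftrightarrow\quad I_x V_x + I_y V_y = -I_t$$

The first-order expansion only holds when the displacement is small compared to the scale over which the gradient changes, which suits the sub-pixel shifts we are looking for.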

However, this equation has two unknowns. Even if we know the rate of change over time (by measuring the pixel intensity in two frames), a single pixel only tells us the component of the motion along its intensity gradient, so we cannot separate vertical from horizontal movement. We could solve it for a one-dimensional image (a row of pixels), but not for two dimensions. This is the "aperture problem", and it must be overcome by making additional assumptions, which all optical flow algorithms do.
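The aperture problem also shows up directly in the least-squares formulation used below. If every pixel's gradient points in the same direction (say, an image of vertical stripes), the 2x2 matrix $A^T A$ built from all the $(I_x, I_y)$ pairs is singular, so motion along the stripes cannot be recovered. A minimal sketch on two synthetic images; it uses `np.gradient` central differences instead of the Sobel filter, and the images and `structure_tensor` helper are made up for illustration.

```python
import numpy as np

# Two synthetic 64x64 images: vertical stripes (structure only along x),
# and a pattern with structure along both x and y.
y, x = np.mgrid[0:64, 0:64].astype(float)
stripes = np.sin(x / 4.0)
blobs = np.sin(x / 4.0) + np.cos(y / 5.0)


def structure_tensor(img):
    """Return A^T A for the least-squares system built from all pixels of `img`."""
    Iy, Ix = np.gradient(img)  # np.gradient returns the row (y) derivative first
    A = np.stack([Ix.ravel(), Iy.ravel()], axis=1)
    return A.T @ A


for name, img in [("stripes", stripes), ("blobs", blobs)]:
    eig = np.linalg.eigvalsh(structure_tensor(img))
    print(f"{name}: eigenvalues {eig.round(2)}")
```

For the stripes, one eigenvalue is zero: every pixel constrains only $V_x$, and any $V_y$ fits equally well. With structure in both directions both eigenvalues are positive and the system has a unique least-squares solution.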

The popular Lucas-Kanade method assumes that all pixels in a neighborhood have a similar flow, and solves the equation above for all pixels in that neighborhood at once using least squares. This linear system can be represented as (equation shamelessly copied from Wikipedia):

$$ \begin{bmatrix} I_x(q_1) & I_y(q_1) \\[10pt] \vdots & \vdots \\[10pt] I_x(q_n) & I_y(q_n) \end{bmatrix} \begin{bmatrix} V_x\\[10pt] V_y \end{bmatrix} = \begin{bmatrix} -I_t(q_1) \\[10pt] \vdots \\[10pt] -I_t(q_n) \end{bmatrix} $$

Where $I$ and $V$ are intensity and pixel velocity, as before, and $q_1, \dots, q_n$ are the pixels in the neighborhood. The system is overdetermined (more equations than unknowns), so in general it has no exact solution; least squares finds the "most reasonable" motion vector, the one that minimizes the sum of squared residuals.
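To check that this least-squares formulation really recovers a sub-pixel shift, here is a minimal sketch on a synthetic scene with a known motion. Like the analysis in this post (and unlike the classic windowed Lucas-Kanade), it solves for a single motion vector over the whole image. The `scene` function, the shift values and `lucas_kanade_global` are hypothetical, and gradients come from `np.gradient` central differences.

```python
import numpy as np


def scene(x, y):
    """Smooth synthetic 'landscape' that can be sampled at sub-pixel positions."""
    return np.sin(x / 5.0) + np.cos(y / 7.0) + 0.5 * np.sin((x + y) / 9.0)


def lucas_kanade_global(frame0, frame1):
    """Least-squares solution of Ix*Vx + Iy*Vy = -It over all pixels (one global V)."""
    Iy, Ix = np.gradient(frame0)  # spatial derivatives along rows (y) and columns (x)
    It = frame1 - frame0          # temporal derivative between the two frames
    A = np.stack([Ix.ravel(), Iy.ravel()], axis=1)
    V, *_ = np.linalg.lstsq(A, -It.ravel(), rcond=None)
    return V                      # (Vx, Vy) in pixels per frame


y, x = np.mgrid[0:128, 0:128].astype(float)
true_shift = np.array([0.3, -0.2])  # hypothetical sub-pixel motion (x, y)
frame0 = scene(x, y)
# The content moves by +true_shift: frame1(x, y) = frame0(x - Vx, y - Vy)
frame1 = scene(x - true_shift[0], y - true_shift[1])

print(lucas_kanade_global(frame0, frame1))
```

The estimate lands close to (0.3, -0.2); the small remaining error comes from the first-order approximation and the finite-difference gradients.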

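The unknown is the $V$ vector. If you look at each pixel's equation on its own (a separate $V$ per pixel, instead of the single $V$ shared by all pixels in the system above), it describes a line of possible $(V_x, V_y)$ values, and the least-squares solution is the point that fits all of these lines best. I am quite interested to see what that looks like!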

```python
# Use a fixed order of the axes for easier processing
d2 = d.transpose("dt", "band", "y", "x").astype(np.float32)

FRAME = 0
frame = d2.isel(band=0, dt=FRAME).values

# The only time it's acceptable to start a variable name with a capital is when making it closer to a math equation
# scipy's Sobel filter is unnormalised (it returns 8x the per-pixel slope), so divide by 8;
# axis=1 runs along x (columns), axis=0 along y (rows)
Ix = (scipy.ndimage.sobel(frame, axis=1) / 8).flatten()
Iy = (scipy.ndimage.sobel(frame, axis=0) / 8).flatten()
It = np.diff(d2.isel(band=0, dt=slice(FRAME, FRAME + 2)).values, axis=0)[0].flatten()

# Least-squares solution of Ix*Vx + Iy*Vy = -It over all pixels
A = np.stack([Ix, Iy])
x_lstsq = np.linalg.lstsq(A.T, -It, rcond=None)[0]
x_lstsq
```

```python
# Each pixel's equation on its own is a line in (Vx, Vy) space: Vy = -(Ix*Vx + It) / Iy.
# Evaluate it at two Vx values to get the line endpoints, skipping pixels with Iy == 0.
v_x = np.linspace(-.2, .2, 2)
valid = Iy != 0
v_y = -(Ix[valid] * v_x[:, np.newaxis] + It[valid]) / Iy[valid]
x = np.broadcast_to(v_x[:, np.newaxis], v_y.shape)

N = 10000  # only plot every N-th line
plt.plot(x[:, ::N], v_y[:, ::N], alpha=.3)
plt.plot(*x_lstsq, "ro")
plt.ylim(-.2, .2);
```

Okay, that does not look very interesting, and the solution point is hard to spot. Maybe a heatmap would work better. Anyhow, let's calculate the optical flow for every image compared to the first image, to get an idea of the pointing accuracy. We do this over the full image, as we assume that the pointing error is constant across the whole image.

```python
def solve_of(frames):
    "Solve the 1st-order optical flow equation for one global motion vector using least squares"
    # Sobel gradients divided by 8 to approximate the per-pixel derivative
    Ix = (scipy.ndimage.sobel(frames[0], axis=1) / 8).flatten()  # along x (columns)
    Iy = (scipy.ndimage.sobel(frames[0], axis=0) / 8).flatten()  # along y (rows)
    It = np.diff(frames[:2], axis=0)[0].flatten()  # second frame minus first frame
    A = np.stack([Ix, Iy])
    # Solve Ix*Vx + Iy*Vy = -It for (Vx, Vy)
    s = np.linalg.lstsq(A.T, -It, rcond=None)[0]
    return s, A, It


vectors = []
for i in range(d2.sizes["dt"]):
    # Sum all bands to lower the effect of noise
    frames = d2.isel(dt=[0, i]).sum(dim="band").values
    s, A, It = solve_of(frames)
    vectors.append(s)

    # Calculate the error decrease when we apply the intensity change predicted by the solution
    p1, p2 = frames
    err1 = np.abs(p1 - p2).mean()
    err2 = np.abs(p1 - (A.T @ s).reshape(p1.shape) - p2).mean()
    print(f"Motion vector for frame 0 to {i}:", "{:6.3f} {:6.3f}".format(*s), "; err decrease: {:5.1f} -> {:5.1f}".format(err1, err2))

print("Standard deviation of motion vectors: {:6.3f} {:6.3f}".format(*np.std(np.stack(vectors), axis=0)))
```

Cool, we get reasonable solutions: when we apply the intensity change predicted by the solution, the mean absolute difference between the frames goes down. So at least the solutions we found are consistent with the optical flow equation.
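One detail matters when reading these motion vectors as pixel shifts: `scipy.ndimage.sobel` is not normalised. It applies a `[-1, 0, 1]` difference along one axis and `[1, 2, 1]` smoothing along the other, so on a ramp whose intensity grows by exactly 1 per pixel it returns 8. Velocities solved from raw Sobel gradients therefore come out 8 times too small unless the gradients are divided by 8. A minimal NumPy-only sketch of the same 3x3 kernel (interior pixels only; `sobel_x` is a hypothetical helper):

```python
import numpy as np


def sobel_x(img):
    """3x3 Sobel derivative along x (columns), evaluated on interior pixels only.

    Uses the same kernel as scipy.ndimage.sobel(img, axis=1): a [-1, 0, 1]
    difference along x combined with [1, 2, 1] smoothing along y.
    """
    diff = img[:, 2:] - img[:, :-2]                 # [-1, 0, 1] along x
    return diff[:-2] + 2 * diff[1:-1] + diff[2:]    # [1, 2, 1] along y


ramp = np.tile(np.arange(10, dtype=float), (10, 1))  # +1 intensity per pixel in x
print(sobel_x(ramp)[0, 0])  # 8.0, not 1.0
```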

I did a few more experiments (not shown here) using different bands and different pairs of images, and the results seem reasonably consistent, which is good. These pointing errors are quite low though, which is very impressive given that this satellite flies at an altitude of 786 km above the Earth's surface. I don't think it's really possible to do anything like superresolution, for which you'd need much larger deviations to be able to "stack" the pixels.
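To put a motion-vector standard deviation (in pixels) into perspective, it can be converted to metres on the ground and to the pointing angle seen from orbit. A minimal sketch, assuming the 10 m pixel size, the nominal 786 km altitude and a nadir view (off-nadir the line of sight is longer); the input value of 0.03 px is hypothetical, not a measured result.

```python
import math

PIXEL_SIZE_M = 10.0     # Sentinel-2 10 m bands
ALTITUDE_M = 786_000.0  # nominal orbit altitude


def pointing_error(std_px):
    """Convert a shift in pixels to ground metres and to arcseconds seen from orbit (nadir view)."""
    ground_m = std_px * PIXEL_SIZE_M
    angle_rad = math.atan(ground_m / ALTITUDE_M)
    return ground_m, math.degrees(angle_rad) * 3600


# Hypothetical example value, not a measured result
ground_m, arcsec = pointing_error(0.03)
print(f"{ground_m:.2f} m on the ground, {arcsec:.3f} arcsec seen from orbit")
```

A shift of a few hundredths of a pixel thus corresponds to a few decimetres on the ground, or well under a tenth of an arcsecond of pointing.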

Let's plot this data one more time and then be done with it.

```python
SCALE = 1500  # divisor that maps the reflectance values to [0, 1] for display
X = 670       # left edge of the zoom window, in pixels
Y = 730       # top edge of the zoom window, in pixels
SIZE = 100    # 100 x 100 px, i.e. 1 km x 1 km at 10 m resolution
xslice = slice(X, X + SIZE)
yslice = slice(Y, Y + SIZE)

fig, ax = plt.subplots(nrows=2, ncols=6, figsize=FIGSIZE)
fig.suptitle("True-color imagery and differences between frames")
fig.tight_layout()

# Assumes at least 6 frames
for i in range(6):
    v = d2.isel(dt=i, x=xslice, y=yslice).transpose("y", "x", "band").sortby("band", ascending=False).values
    ax[0, i].axis("off")
    # Show original image
    ax[0, i].imshow((v / SCALE).clip(0, 1))
    ax[1, i].axis("off")
    if i + 1 < d2.sizes["dt"]:
        # Difference with the next frame, for summed bands
        diff = d2.isel(dt=i, x=xslice, y=yslice) - d2.isel(dt=i + 1, x=xslice, y=yslice)
        ax[1, i].imshow(diff.sum(dim="band").values)
```

It's quite amazing how well these images overlap. I tried upsampling by 100x and then shifting the pixels by a few rows (according to the optical flow solution) to see whether the textures in the second row would disappear. They did not, so I assume these are also simply differences due to different sun positions and reflections.
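Upsampling by 100x is one way to apply a sub-pixel shift; another common technique (swapped in here, not what was used above) is the Fourier shift theorem, which shifts an image by any fractional amount by multiplying its spectrum with a phase ramp. A minimal NumPy sketch; `fourier_shift_2d` is a hypothetical helper, and it treats the image as periodic, so content wraps around at the borders. SciPy also ships `scipy.ndimage.fourier_shift` and the spline-based `scipy.ndimage.shift` for the same job.

```python
import numpy as np


def fourier_shift_2d(img, dy, dx):
    """Shift `img` by (dy, dx) pixels using the Fourier shift theorem.

    The image is treated as periodic, so content wraps around at the borders.
    """
    ky = np.fft.fftfreq(img.shape[0])[:, np.newaxis]  # cycles per pixel along y
    kx = np.fft.fftfreq(img.shape[1])[np.newaxis, :]  # cycles per pixel along x
    phase = np.exp(-2j * np.pi * (ky * dy + kx * dx))
    return np.fft.ifft2(np.fft.fft2(img) * phase).real


rng = np.random.default_rng(0)
img = rng.random((32, 32))

# Sanity check: an integer shift matches np.roll
assert np.allclose(fourier_shift_2d(img, 1, 2), np.roll(img, (1, 2), axis=(0, 1)))

# A sub-pixel shift, e.g. by an optical flow estimate
half = fourier_shift_2d(img, 0.0, 0.5)
```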

Conclusion

Well, that was fun! It turns out that the pointing accuracy of the Sentinel-2 MSI instruments is very high, with a standard deviation of just a few decimeters at a distance of 786 km (assuming our optical flow calculations are correct). Very impressive.